What Is RAG in 2025? A Practical, No-Hype Guide with a Simple Demo

By ToolsifyPro Editorial

A practical 2025 guide to Retrieval-Augmented Generation (RAG): how it works, today’s best practices, and a simple no-code demo you can try.

Overview

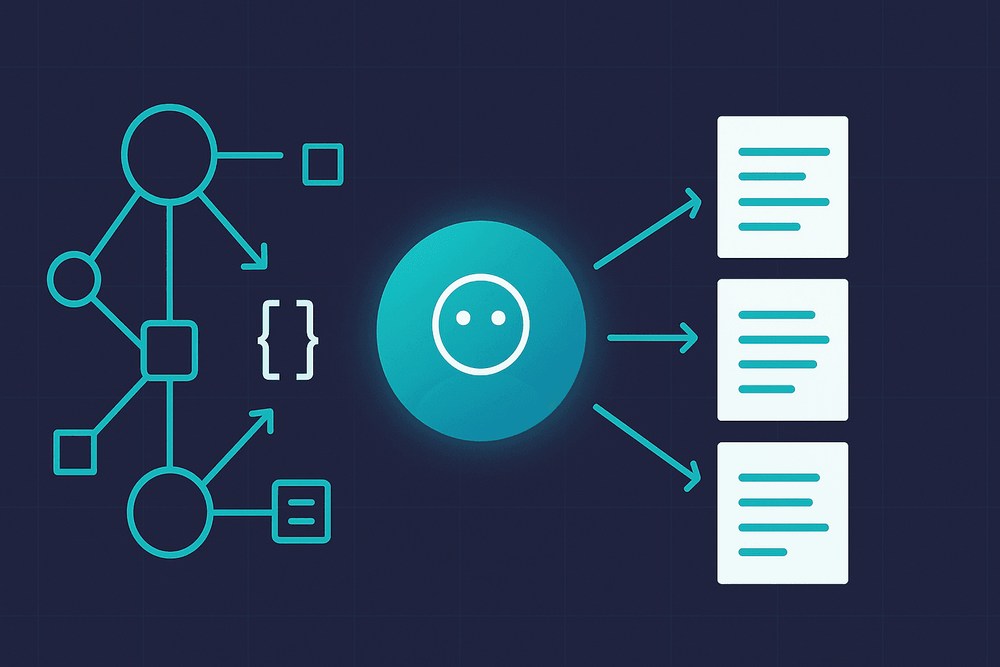

Retrieval-Augmented Generation (RAG) lets large language models answer with your sources—PDFs, policy docs, spreadsheets, wikis—without retraining the model. Instead of hoping the model “remembers” facts, you retrieve the most relevant snippets at question time and augment the prompt with them so the model can ground its answer. That single design choice is why RAG has become the default way to ship reliable AI features in 2025: faster iteration, lower cost than heavy fine-tuning, and answers that can cite where they came from. OpenAI Cookbook

This guide demystifies RAG today: how it works, what’s changed in 2025, a copy-and-paste prompt template, and a no-code mini-demo you can try right now. If you’re building for customers or internal teams, you’ll find production-grade checklists you can run this week.

What exactly is RAG (in plain English)?

Think of RAG as an open-book exam for your AI: when a user asks something, your system quickly flips to the right pages, inserts those passages into the model’s context, and then asks the model to answer using only those pages. It’s still generative, but grounded. In 2025, RAG is widely used across support, analytics Q&A, and policy assistants because it narrows the model’s scope and reduces “hallucinations” by tying each answer to retrieved passages that can be cited. WIRED

Core steps:

Ingest your data (PDFs, web pages, CSVs, tickets).

Chunk it into small, meaningful pieces.

Embed chunks into vectors and store them in a vector index.

Retrieve the most similar chunks at question time.

(Optional but powerful) Rerank the retrieved set to bring the best to the top.

Generate an answer with the model, strictly using the retrieved context.

Evaluate & log queries, selected chunks, and feedback—so quality improves over time.
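To make the retrieve step concrete, here is a minimal sketch of similarity search in plain Python. It is only an illustration: `embed` is a toy word-count stand-in for a real embedding model, and the function names and sample chunks are assumptions made up for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


chunks = [
    "The warranty covers battery defects for 24 months.",
    "Returns are accepted within 30 days of delivery.",
    "Our office is closed on public holidays.",
]
top = retrieve("How long does the warranty cover the battery?", chunks, k=1)
print(top)
```

In a real system the word counts would be replaced by vectors from an embedding model and the sort by a vector index, but the shape of the loop stays the same.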

What’s new in 2025 (and why it matters)

Smarter chunking: Teams increasingly use context-aware and adaptive chunking rather than fixed sizes. This reduces truncation and boosts retrieval precision. Pinecone Stack Overflow Blog

Better embeddings: Newer, higher-dimensional text embeddings (e.g., OpenAI’s text-embedding-3 series) have improved recall and multilingual coverage; switching to a modern model often helps more than tuning an old pipeline. OpenAI Platform

Reranking goes mainstream: Cross-encoders like Cohere Rerank v3.5 are now widely available (Bedrock, Azure/marketplaces), giving a big lift to answer quality—especially on nuanced, constraint-heavy questions. Cohere Documentation Amazon Web Services, Inc.

Graph-aware retrieval (GraphRAG): For messy corpora (policies, org charts, multi-doc narratives), extracting entities/relations and retrieving over that graph increases coherence and reduces missed links. Microsoft

Postgres-native vectors: pgvector keeps vectors next to your transactional data, simplifying ops and enabling hybrid filters with SQL you already know. GitHub

Architecture you can trust (from zero to first answer)

Ingest → Chunk → Embed → Index → Retrieve → Rerank → Generate → Evaluate

Ingest. Normalize files to text; keep source metadata (title, URL, section, timestamp).

Chunk. Start with 200–400 tokens with ~10–20% overlap; prefer semantic or adaptive splitting over naive length-only rules where possible. Logs should record the pre- and post-chunk sizes. Pinecone Medium

Embed. Use a current, high-quality embedding model with stable versioning (e.g., text-embedding-3-large). Track model name + version in metadata for reproducibility. OpenAI Platform

Index. Choose a vector index appropriate to your stack: managed (Pinecone/Weaviate) or self-hosted (pgvector, FAISS). For simple stacks, pgvector inside Postgres cuts infra complexity. GitHub

Retrieve. Start with k=20 using cosine similarity. Add hybrid search (BM25 + vectors) when your queries include exact terms or IDs.

Rerank (Recommended). Feed the top-k candidates to a cross-encoder (e.g., Cohere Rerank v3.5) and keep the top 5–8 passages; this consistently improves answer quality on tricky questions. Cohere Documentation

Generate. Use strict instructions: “Answer only from the context; if missing, reply ‘Not in the provided context.’ Include citations.”

Evaluate. Track: answer helpfulness, citation accuracy, time-to-first-token, retrieval precision@k, and containment (was the needed fact actually in the retrieved set?).
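The Chunk step above can be sketched as a sliding window with overlap. This is a simplified illustration, not a production splitter: it treats whitespace-separated words as tokens (real tokenizers count differently), and the function name and the 45-token default (15% of 300) are assumptions chosen to match the numbers in this guide.

```python
def chunk_tokens(tokens: list[str], size: int = 300, overlap: int = 45) -> list[list[str]]:
    """Split tokens into windows of `size` that share `overlap` tokens."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the text
    return chunks


words = "one two three four five six seven eight nine ten".split()
for piece in chunk_tokens(words, size=4, overlap=1):
    print(" ".join(piece))
```

Logging `len(tokens)` before the split and the length of each chunk after it gives you the pre- and post-chunk sizes mentioned above.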

Simple no-code demo (try this before you build)

You can simulate a RAG loop without writing a backend:

Prepare context: Pick 3–5 PDFs (e.g., product manuals). Convert to text (scan pages → OCR if needed).

Split: Use a free splitter to create ~300-token chunks with 15% overlap.

Embed & search: Use a hosted embedding notebook or managed vector DB’s web UI to upload chunks and test queries.

Rerank: Paste the top 20 results into a reranker playground (Cohere Rerank) and keep the top 5. Cohere Documentation

Ask the LLM: In a chat UI, paste the 5 chunks as Context and your user question below.

Verify citations: Check each claim maps to a chunk “Source” line. If not, adjust chunk size or add missing docs.

This quick exercise gives you a feel for chunk sizes, retrieval quality, and what users actually ask—before you commit to code.

A minimal, reusable prompt template

SYSTEM You are a precise, factual assistant. Use the context EXACTLY as written. If the answer is not contained in the context, say: “Not in the provided context.”

CONTEXT {{top_chunks_with_titles_and_ids}}

USER QUESTION {{question}}

RESPONSE RULES

- Cite the specific chunk title/ID after each factual claim (e.g., [Source: Warranty.pdf §3]).

- Do NOT invent URLs, policy numbers, or dates.

- If there are conflicting passages, list both with citations and state the uncertainty.
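One way to fill the template from code is sketched below. The `Chunk` dataclass, the `build_prompt` function, and the role/content message layout are assumptions for illustration (the layout follows a common chat convention); adapt them to whatever client library you use.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    chunk_id: str  # hypothetical identifier, e.g. a section marker
    title: str
    text: str


SYSTEM = (
    "You are a precise, factual assistant. Use the context EXACTLY as written. "
    'If the answer is not contained in the context, say: "Not in the provided context."'
)

RULES = (
    "- Cite the specific chunk title/ID after each factual claim.\n"
    "- Do NOT invent URLs, policy numbers, or dates.\n"
    "- If there are conflicting passages, list both with citations and state the uncertainty."
)


def build_prompt(question: str, chunks: list[Chunk]) -> list[dict]:
    """Render the template into a system message and a user message."""
    context = "\n\n".join(
        f"[Source: {c.title} {c.chunk_id}]\n{c.text}" for c in chunks
    )
    user = f"CONTEXT\n{context}\n\nUSER QUESTION\n{question}\n\nRESPONSE RULES\n{RULES}"
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": user},
    ]


messages = build_prompt(
    "How long is the battery covered?",
    [Chunk("§3", "Warranty.pdf", "Battery defects are covered for 24 months.")],
)
print(messages[1]["content"])
```

Keeping the source label on the line above each chunk makes it easy for the model to copy the label into its citations.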

Production best practices (2025 edition)

- Chunking that respects meaning

Prefer semantic or structure-aware splitting (headings, bullets, tables) over fixed windows.

Default: 200–400 tokens with ~10–20% overlap. Excessive overlap inflates index size and slows retrieval. Pinecone Medium
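As a rough sketch of structure-aware splitting, the function below packs whole paragraphs into chunks instead of cutting at a fixed length. It assumes blank lines mark paragraph boundaries and counts whitespace-separated words as tokens; the function name is made up for this example.

```python
import re


def split_by_paragraphs(text: str, max_tokens: int = 300) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_tokens words.

    A single paragraph longer than max_tokens is kept whole rather than cut.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        n = len(para.split())
        if current and count + n > max_tokens:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


doc = "Intro line one.\n\nDetails about returns.\n\nDetails about warranty claims."
for chunk in split_by_paragraphs(doc, max_tokens=6):
    print(repr(chunk))
```

The same idea extends to headings, bullets, and tables: split on the structural boundary first, then pack up to the token budget.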

- Hybrid retrieval

Combine BM25 (exact terms) with vector similarity (semantic). When users paste error codes or SKUs, BM25 shines; for paraphrased questions, vectors win.
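One common way to merge a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). The article does not prescribe a fusion method, so treat this as one option among several; the document IDs are invented, and `k=60` is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked ID lists; each list adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order.
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_hits = ["sku-123", "faq-7", "guide-2"]        # exact-term matches
vector_hits = ["guide-2", "sku-123", "policy-9"]   # semantic matches
print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
```

Documents that appear near the top of both lists rise to the front, which is the behavior you want when a query mixes an exact SKU with a paraphrased question.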

- Rerank for quality

Cross-encoders like Cohere Rerank v3.5 evaluate query–document pairs and reorder results by true semantic relevance—especially useful for long queries with hidden constraints (“policy for contractors in Germany after 2024”). Expect higher precision@5 and better citations. Cohere Documentation
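The rerank step can be sketched with a pluggable scoring function. The `overlap_score` below is a toy stand-in, not a cross-encoder and not Cohere's API; in practice you would swap in a call to your reranking model. All names here are assumptions for illustration.

```python
from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    score_fn: Callable[[str, str], float],
    top_n: int = 5,
) -> list[str]:
    """Score every (query, passage) pair and keep the top_n passages."""
    scored = [(score_fn(query, c), i, c) for i, c in enumerate(candidates)]
    # Sort by score descending; the original position breaks ties.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [c for _, _, c in scored[:top_n]]


def overlap_score(query: str, passage: str) -> float:
    """Toy scorer: fraction of query words that appear in the passage."""
    q = set(query.lower().split())
    p = set(passage.lower().split())
    return len(q & p) / len(q) if q else 0.0


candidates = [
    "contractors in france policy",
    "policy for contractors in germany",
    "holiday schedule",
]
print(rerank("policy for contractors in germany", candidates, overlap_score, top_n=2))
```

Because the scorer is passed in, you can turn reranking on only for queries that need it without changing the rest of the pipeline.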

- Graph-aware retrieval for tangled domains

If your dataset has people, teams, regulations, or storylines across many files, enrich it with entities and relations and retrieve over the graph (a.k.a. GraphRAG). It often surfaces the connecting tissue that vector-only search misses. Microsoft

- Strong metadata & filters

Store source, section, version, language, published_at. These let you filter by “latest only,” exclude deprecated docs, or prefer a region.
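A "latest only" filter over that metadata might look like the sketch below. The record layout follows the fields listed above; the `deprecated` flag and the function name are hypothetical additions for this example.

```python
from datetime import date
from typing import Optional


def latest_only(records: list[dict], language: Optional[str] = None) -> list[dict]:
    """Keep the newest record per (source, section), skipping deprecated ones."""
    latest: dict[tuple, dict] = {}
    for rec in records:
        if rec.get("deprecated"):  # hypothetical flag, not in the field list above
            continue
        if language and rec["language"] != language:
            continue
        key = (rec["source"], rec["section"])
        if key not in latest or rec["published_at"] > latest[key]["published_at"]:
            latest[key] = rec
    return list(latest.values())


records = [
    {"source": "Warranty.pdf", "section": "3", "version": "1",
     "language": "en", "published_at": date(2023, 1, 1)},
    {"source": "Warranty.pdf", "section": "3", "version": "2",
     "language": "en", "published_at": date(2024, 6, 1)},
    {"source": "Returns.pdf", "section": "1", "version": "1",
     "language": "de", "published_at": date(2024, 1, 1)},
]
print(latest_only(records, language="en"))
```

In a vector database the same logic usually becomes a metadata filter on the query rather than a Python loop.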

- Evaluation you can trust

Track at least:

Containment: Was the gold answer present in retrieved chunks?

Precision@k: What fraction of the top-k retrieved chunks were relevant?

Faithfulness: Does the answer cite and match the context?

Latency: Time spent in retrieval, rerank, generation.

Create a small “golden set” of 50–200 real queries and measure weekly.
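Two of these metrics are easy to compute once you have a golden set. The sketch below is a simplified illustration: containment is approximated with a case-insensitive substring match, which is a crude proxy, and the function names are assumptions.

```python
def precision_at_k(retrieved_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of the top-k retrieved chunk IDs that are labeled relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for chunk_id in retrieved_ids[:k] if chunk_id in relevant_ids)
    return hits / k


def containment(retrieved_texts: list[str], gold_answer: str) -> bool:
    """True if the gold answer string appears in any retrieved chunk."""
    gold = gold_answer.lower()
    return any(gold in text.lower() for text in retrieved_texts)


print(precision_at_k(["c1", "c7", "c3", "c9"], {"c1", "c3"}, k=4))
print(containment(["Battery defects are covered for 24 months."], "24 months"))
```

Averaging both over the golden set each week gives you a trend line to compare against after every chunking or retrieval change.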

- UX matters

Show citations as footnotes or expandable chips.

Let users vote “helpful / not helpful,” and give a one-click way to view sources.

- Guardrails

Enforce “answer only from context.” For unsupported asks, return “Not in the provided context” with suggestions.

For sensitive domains, add PII scrubbing at ingest and redact before indexing.
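A very small sketch of scrubbing at ingest is shown below. The two regular expressions are illustrative assumptions that catch only simple email and phone formats; they will miss many real cases and may over-match long digit strings, so a production system would likely use a dedicated PII detection tool.

```python
import re

# Deliberately simple patterns for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub_pii(text: str) -> str:
    """Replace simple email and phone patterns with placeholders before indexing."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(scrub_pii("Contact jane.doe@example.com or +1 (555) 010-9999."))
```

Running the scrubber before chunking and embedding keeps the raw values out of both the index and the prompt.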

- Cost & scaling tips

Chunk once, embed once, re-index incrementally on updates.

Keep rerank optional for cheap queries; enable it on questions tagged “complex.”

Cache answers for common queries; cache retrieval for repeated filters.

If you’re ops-constrained, pgvector inside Postgres is pragmatic and production-proven. GitHub
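"Embed once, re-index incrementally" can be approximated by hashing chunk text and re-embedding only what changed. This is a sketch under assumptions: chunk IDs are stable across runs, and `indexed_hashes` is a hypothetical record of the hashes already stored in your index.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a chunk's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(
    chunks: dict[str, str], indexed_hashes: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Return (IDs to embed or re-embed, IDs to delete from the index)."""
    to_embed = [
        cid for cid, text in chunks.items()
        if indexed_hashes.get(cid) != content_hash(text)
    ]
    to_delete = [cid for cid in indexed_hashes if cid not in chunks]
    return to_embed, to_delete


indexed = {"a": content_hash("old text"), "b": content_hash("same text")}
current = {"a": "new text", "b": "same text", "c": "brand new chunk"}
print(plan_reindex(current, indexed))
```

Unchanged chunks are skipped entirely, so embedding cost scales with the size of the update rather than the size of the corpus.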

Common failure modes (and fast fixes)

Great answer, wrong source. Add rerank and strengthen negative prompts (“Do not infer beyond context”). Sanity-check metadata filters (e.g., region). Cohere Documentation

Hallucinated policy numbers. Require explicit citations by chunk ID and instruct the model to quote exact lines for numbers/dates; lower temperature. WIRED

Users ask for “latest rules,” but you serve old docs. Store version and published_at, and prefer newest unless the user specifies older versions.

“It depends” answers. When context conflicts, show both passages with their citations and add a short decision tree that tells the user which one applies.

Latency spikes. Reduce k at retrieval, rerank only the top candidates from the initial similarity search, and pre-warm indexes before peak hours.

Multilingual users. Use modern multilingual embeddings (Text-Embedding-3 family) or maintain parallel embeddings per language. OpenAI Platform
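Several of the fixes above depend on checking citations automatically. The sketch below flags citations that do not match any chunk actually placed in the context; it assumes answers use the `[Source: ...]` format from the prompt template, and the function name is made up for this example.

```python
import re

CITATION = re.compile(r"\[Source:\s*([^\]]+)\]")


def unknown_citations(answer: str, known_ids: set[str]) -> list[str]:
    """Return cited sources that were not part of the provided context."""
    cited = [match.strip() for match in CITATION.findall(answer)]
    return [c for c in cited if c not in known_ids]


answer = (
    "Batteries are covered for 24 months [Source: Warranty.pdf §3]. "
    "Refunds take 5 days [Source: Refunds.pdf §9]."
)
print(unknown_citations(answer, {"Warranty.pdf §3"}))
```

A non-empty result is a cheap signal to log the query, retry with stricter instructions, or fall back to “Not in the provided context.”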

A week-one delivery plan (checklist)

Day 1 – Scope & sample data

Pick one problem (e.g., warranty Q&A). Export 10–20 representative files.

Define success metrics (containment ≥80%, precision@5 ≥60%).

Day 2 – Ingest & chunking

Normalize to text; keep source metadata.

Start with 300-token chunks, 15% overlap; evaluate on 20 real queries. Pinecone

Day 3 – Embedding & index

Choose a current embedding model (e.g., Text-Embedding-3).

Stand up a simple index (pgvector or managed) with hybrid search. OpenAI Platform GitHub

Day 4 – Retrieval + rerank + prompt

Retrieve top 20, rerank to top 6–8, then generate with strict rules.

Add “Not in the provided context” fallbacks. Cohere Documentation

Day 5 – UX & citations

Show sources inline (e.g., [Source: DocA §2]). Add feedback buttons.

Start building your golden set from real user queries.

Day 6 – Evaluation & tuning

Measure containment, precision@k, latency.

Adjust chunk size/overlap and filters; add graph signals if docs are cross-linked. Microsoft

Day 7 – Launch & learn

Ship to a small user group.

Create a weekly “retrieval quality” report with examples and fixes.

Where RAG shines (and where it doesn’t)

Great fits:

Customer support grounded in your handbook and policies.

Internal Q&A over docs where freshness matters.

Compliance & legal summarization with citations.

Analytics explainers that translate dashboards into plain language.

Poor fits:

Open-ended creative writing without factual grounding.

Tasks that need a change in model behavior (such as a different reasoning style) rather than access to new facts; consider fine-tuning for these later.

Conclusion

RAG in 2025 is less about buzzwords and more about repeatable engineering: clean chunks, modern embeddings, pragmatic indexing, a rerank step when needed, strict prompts, and honest evaluation. Start with one workflow, ship quickly, watch how users ask questions, and iterate. With a week of focused work, you can deploy an assistant that answers from your sources with trustworthy citations—and that’s often all it takes to transform support, onboarding, and internal search. OpenAI Cookbook Pinecone Cohere Documentation OpenAI Platform